SfM Plug-in has the functions below.

Create point clouds from a video

Create point clouds based on images extracted from photos and videos taken with a digital camera.

Display arrangement of the point cloud

Point clouds output by the analysis can be adjusted so that they are displayed properly in UC-win/Road. They can be rotated, scaled to their actual size, and repositioned. This makes it possible to combine and display multiple point cloud datasets and to overlay point cloud data on VR models.

Camera calibration file

Photograph a chessboard pattern and import the photo. The plug-in analyzes the distances and features in the image and creates a file that is used to correct image distortion.

Create a Visual Words file

A Visual Words file, which is used during point cloud creation, is created and output.

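Because SfM reconstructs a scene only up to an unknown scale, placing a point cloud in UC-win/Road amounts to applying a similarity transform (uniform scale, rotation, translation). The Python code below is a minimal sketch under stated assumptions, not the plug-in's implementation: it assumes Z is the vertical axis, rotates only about that axis (yaw), and derives the scale from one known real-world distance between two reconstructed points. The functions `scale_from_reference` and `adjust_point_cloud` are hypothetical names.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]

def scale_from_reference(p: Point, q: Point, real_length_m: float) -> float:
    """Scale factor that makes the reconstructed distance |pq| equal a measured real length."""
    return real_length_m / math.dist(p, q)

def adjust_point_cloud(points: List[Point], scale: float, yaw_deg: float,
                       offset: Point) -> List[Point]:
    """Scale, rotate about the vertical Z axis (assumed), then translate every point."""
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    ox, oy, oz = offset
    adjusted = []
    for x, y, z in points:
        x, y, z = scale * x, scale * y, scale * z      # uniform scale
        xr, yr = c * x - s * y, s * x + c * y          # counter-clockwise rotation about Z
        adjusted.append((xr + ox, yr + oy, z + oz))    # translation to the target position
    return adjusted

# Two reconstructed points 0.5 units apart are known to be 2 m apart on site.
k = scale_from_reference((0.0, 0.0, 0.0), (0.0, 0.0, 0.5), 2.0)
print(k)  # 4.0
moved = adjust_point_cloud([(1.0, 0.0, 0.0)], k, 90.0, (10.0, 0.0, 0.0))
print(moved)
```

Applying scale before rotation and translation keeps the offset in real-world units, so it can be read directly from site coordinates.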

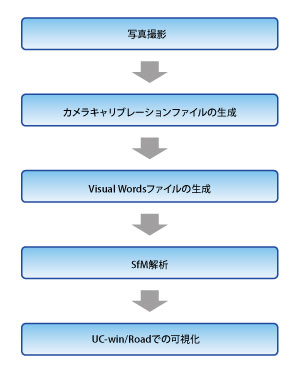

Fig. 1 Flow of the analysis

Taking photos

Use a digital camera to photograph the space for which you want to create a 3D point cloud. Multiple photos must be taken from slightly different positions.
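Why the positions must differ can be illustrated with the simplified textbook case of two rectified views (an idealization; SfM itself handles arbitrary camera poses). With focal length $f$ in pixels, baseline $B$ between the two camera positions, and disparity $d$ (the horizontal shift of the same point between the two images), the depth of the point is:

$$
Z = \frac{f\,B}{d}
$$

For example, $f = 1000$ px, $B = 0.5$ m and $d = 25$ px give $Z = 1000 \times 0.5 / 25 = 20$ m. If both photos are taken from the same position, then $B = 0$, every point has $d = 0$, and depth cannot be recovered.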

In addition, the camera properties are required as parameters for the SfM analysis. If the photos do not contain EXIF information, you need to photograph a chessboard pattern and create a calibration file.

- Exif (Exchangeable image file format): an image file format that adds information about the shooting conditions to digital camera photo data. Metadata such as the shooting date, camera model name, resolution, exposure time, aperture value, focal length, ISO speed, and color space are saved with the image.
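The EXIF FocalLength tag records the focal length in millimeters, whereas SfM works with a focal length in pixels. A common conversion, sketched below in Python, multiplies by the image width in pixels and divides by the sensor width in millimeters. This is a sketch under assumptions: the sensor width is not stored directly in standard EXIF and is assumed to be looked up for the camera model, pixels are assumed square, the principal point is assumed to lie at the image center, and lens distortion is ignored. `intrinsics_from_exif` is a hypothetical helper, not part of the plug-in.

```python
from typing import List

def intrinsics_from_exif(focal_mm: float, width_px: int, height_px: int,
                         sensor_width_mm: float) -> List[List[float]]:
    """Build a pinhole intrinsic matrix K from EXIF-style values (hypothetical helper)."""
    # Focal length in pixels = focal length (mm) * pixels per mm across the sensor width.
    f_px = focal_mm * width_px / sensor_width_mm
    # Assumption: square pixels and principal point at the image center.
    cx, cy = width_px / 2.0, height_px / 2.0
    return [[f_px, 0.0, cx],
            [0.0, f_px, cy],
            [0.0, 0.0, 1.0]]

# Example: 24 mm lens, 6000 x 4000 px image, 36 mm wide (full-frame) sensor.
K = intrinsics_from_exif(24.0, 6000, 4000, 36.0)
print(K[0][0])  # 4000.0
```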

Create a camera calibration file

Create a calibration file that describes the characteristics of the digital camera, calculated from photos of the chessboard pattern.
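The calibration file's format is not described here. As a hedged illustration, the sketch below assumes the widely used radial-tangential (Brown-Conrady) model with coefficients k1, k2, k3, p1, p2, the same model OpenCV uses; in OpenCV those coefficients and the focal lengths would typically be estimated from chessboard photos with `cv2.findChessboardCorners` and `cv2.calibrateCamera`. The Python code shows how such coefficients move an ideal point to its distorted position, and how the distortion can be removed again by fixed-point iteration. The function names `distort` and `undistort_pixel` and all coefficient values are hypothetical.

```python
from typing import Tuple

def distort(x: float, y: float, k1: float, k2: float, p1: float, p2: float,
            k3: float = 0.0) -> Tuple[float, float]:
    """Apply radial-tangential distortion to normalized image coordinates (x, y)."""
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    xd = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    yd = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return xd, yd

def undistort_pixel(u: float, v: float, fx: float, fy: float, cx: float, cy: float,
                    k1: float, k2: float, p1: float, p2: float, k3: float = 0.0,
                    iterations: int = 20) -> Tuple[float, float]:
    """Map a distorted pixel (u, v) back to its ideal pinhole position by fixed-point iteration."""
    # Pixel -> normalized coordinates using the intrinsics.
    xd, yd = (u - cx) / fx, (v - cy) / fy
    x, y = xd, yd  # initial guess: no distortion
    for _ in range(iterations):
        r2 = x * x + y * y
        radial = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
        dx = 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
        dy = p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
        # Solve xd = x * radial + dx for x, holding radial and dx at the current estimate.
        x, y = (xd - dx) / radial, (yd - dy) / radial
    # Normalized -> pixel coordinates.
    return x * fx + cx, y * fy + cy

# Round trip with made-up coefficients: distort an ideal point, then undistort it.
fx = fy = 1000.0
cx, cy = 640.0, 480.0
coeffs = dict(k1=-0.1, k2=0.01, p1=0.001, p2=-0.0005)
xd, yd = distort(0.26, -0.18, **coeffs)
u, v = undistort_pixel(xd * fx + cx, yd * fy + cy, fx, fy, cx, cy, **coeffs)
print(round(u, 6), round(v, 6))  # 900.0 300.0
```

The iteration converges quickly for moderate distortion like this; strongly distorted lenses (e.g. fisheye) generally need a different model.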

Create a Visual Words file

Create a Visual Words file, which is needed to quickly judge the degree of similarity between images during the SfM analysis. The file is created from photos of the analysis target. Because creating a Visual Words file takes an enormous amount of time, it is recommended that you use the sample files supplied with the plug-in.
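Assuming the Visual Words file follows the usual bag-of-visual-words idea (its actual contents are not documented here), the vocabulary is a set of cluster centers, typically found by k-means over a very large number of local feature descriptors, which is one reason building it is slow. Each image's descriptors are then mapped to their nearest word, and images are compared by their word histograms. The Python sketch below uses toy 2-D descriptors and cosine similarity; the function names and data are hypothetical, and practical systems often add tf-idf weighting.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]

def nearest_word(descriptor: Vector, vocabulary: List[Vector]) -> int:
    """Index of the visual word (cluster center) closest to the descriptor (squared Euclidean distance)."""
    return min(range(len(vocabulary)),
               key=lambda i: sum((a - b) ** 2 for a, b in zip(descriptor, vocabulary[i])))

def word_histogram(descriptors: List[Vector], vocabulary: List[Vector]) -> List[float]:
    """Count how often each visual word occurs in one image, then L2-normalize the counts."""
    hist = [0.0] * len(vocabulary)
    for d in descriptors:
        hist[nearest_word(d, vocabulary)] += 1.0
    norm = math.sqrt(sum(h * h for h in hist))
    return [h / norm for h in hist] if norm > 0 else hist

def similarity(h1: List[float], h2: List[float]) -> float:
    """Cosine similarity of two L2-normalized histograms (1.0 = same word distribution)."""
    return sum(a * b for a, b in zip(h1, h2))

# Toy 2-D "descriptors" and a 3-word vocabulary (real descriptors such as SIFT are 128-D).
vocab = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
h_a = word_histogram([(1.0, 1.0), (9.0, 1.0), (0.0, 9.0)], vocab)
h_b = word_histogram([(0.5, 0.0), (11.0, 0.5), (1.0, 11.0)], vocab)
h_c = word_histogram([(1.0, 0.0), (0.0, 1.0), (1.0, 1.0)], vocab)
print(round(similarity(h_a, h_b), 3))  # 1.0
print(round(similarity(h_a, h_c), 3))  # 0.577
```

Comparing short histograms is much cheaper than matching every descriptor pair, which is what makes a quick similarity check between photos possible.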

SfM Analysis

Import the photos, the camera calibration file, and the Visual Words file, set the analysis conditions, and then run the analysis.
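The plug-in's internal algorithm is not documented here. One of the simplest ways to turn a feature matched in two photos into a 3D point, shown below as a hedged Python sketch, is midpoint triangulation: take the two viewing rays from the camera centers through the matched feature and return the midpoint of the shortest segment between them. It assumes the camera centers and ray directions are already known in world coordinates; full SfM pipelines typically also refine points and poses with bundle adjustment. `triangulate_midpoint` is a hypothetical name.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]

def _dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))

def triangulate_midpoint(c1: Vec3, d1: Vec3, c2: Vec3, d2: Vec3,
                         eps: float = 1e-12) -> Vec3:
    """Midpoint of the shortest segment between rays c1 + s*d1 and c2 + t*d2."""
    w0 = tuple(p - q for p, q in zip(c1, c2))
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b  # equals |d1|^2 |d2|^2 sin^2(angle between rays)
    if denom <= eps * a * c:
        # Parallel rays: no parallax, so the depth along the rays is undetermined.
        raise ValueError("rays are parallel; the two views lack parallax")
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = [ci + s * di for ci, di in zip(c1, d1)]  # closest point on ray 1
    p2 = [ci + t * di for ci, di in zip(c2, d2)]  # closest point on ray 2
    return tuple((x + y) / 2.0 for x, y in zip(p1, p2))

# Two cameras 4 units apart both observe the point (1, 2, 10).
X = triangulate_midpoint((0.0, 0.0, 0.0), (1.0, 2.0, 10.0),
                         (4.0, 0.0, 0.0), (-3.0, 2.0, 10.0))
print(X)  # (1.0, 2.0, 10.0)
```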

Visualization in UC-win/Road

When the SfM analysis is run, the camera viewpoint positions, arrows showing the viewing directions, and the point clouds are displayed.
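The viewpoint positions and direction arrows can be derived from each estimated camera pose. The pose convention used by the plug-in is not stated here; the Python sketch below assumes the common world-to-camera convention x_cam = R X + t, under which the camera position is C = -Rᵀt and the viewing direction (the camera's +Z axis) in world coordinates is Rᵀ(0, 0, 1), the third row of R. The function names are hypothetical.

```python
from typing import List, Tuple

Matrix3 = List[List[float]]
Vec3 = Tuple[float, float, float]

def camera_center(R: Matrix3, t: Vec3) -> Vec3:
    """Camera position in world coordinates: C = -R^T t."""
    # (R^T t)_i = sum_j R[j][i] * t[j]
    return tuple(-sum(R[j][i] * t[j] for j in range(3)) for i in range(3))

def viewing_direction(R: Matrix3) -> Vec3:
    """World-space direction of the optical (+Z) axis: R^T (0, 0, 1), i.e. the third row of R."""
    return (R[2][0], R[2][1], R[2][2])

# Example world-to-camera rotation: -90 degrees about the Y axis.
R = [[0.0, 0.0, -1.0],
     [0.0, 1.0, 0.0],
     [1.0, 0.0, 0.0]]
t = (1.0, 2.0, 3.0)
print(camera_center(R, t))   # (-3.0, -2.0, 1.0)
print(viewing_direction(R))  # (1.0, 0.0, 0.0)
```

A viewer would draw a marker at `camera_center` and an arrow from it along `viewing_direction` for each photo.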

Fig. 2 Photo of the showroom

Fig. 3 Analysis result of the showroom
